Orbital 0.33 - Landing page insights, improved query editor, and simpler deployments.

- Date

Orbital 0.33.0 - "Growing Pains" Edition

Orbital 0.33 is out - with lots (and lots) of small, incremental improvements to the features you already know and love.

Sure, we skipped 0.32 - but who hasn’t had an episode in early 30’s they forgot?

Because this release is all about beloved features growing, and squashing bug fixes, and because we made a bold choice shortly after Dry January finished to name each of this year’s releases after an 80’s sitcom, we’re proud to present Orbital 0.33 - The Growing Pains edition.

Highlights

- Improved UX: landing page and query editor improvements

- Orbital is simpler to deploy, as we’ve merged the Stream Server into Orbital

- Improved S3 connector

- Streaming queries observable over Http endpoints

- Avro support

- Lots of bug fixes

A new landing experience

We’ve completely redesigned the landing page and now surface insights into the health of your project’s connections, an at a glance overview of schema changes, as well as metrics on currently running services.

We’ll be adding more content in this area as well, to help get new users up to speed, and provide more experienced users with documentation and tutorials to improve their Orbital productivity. Watch this space!

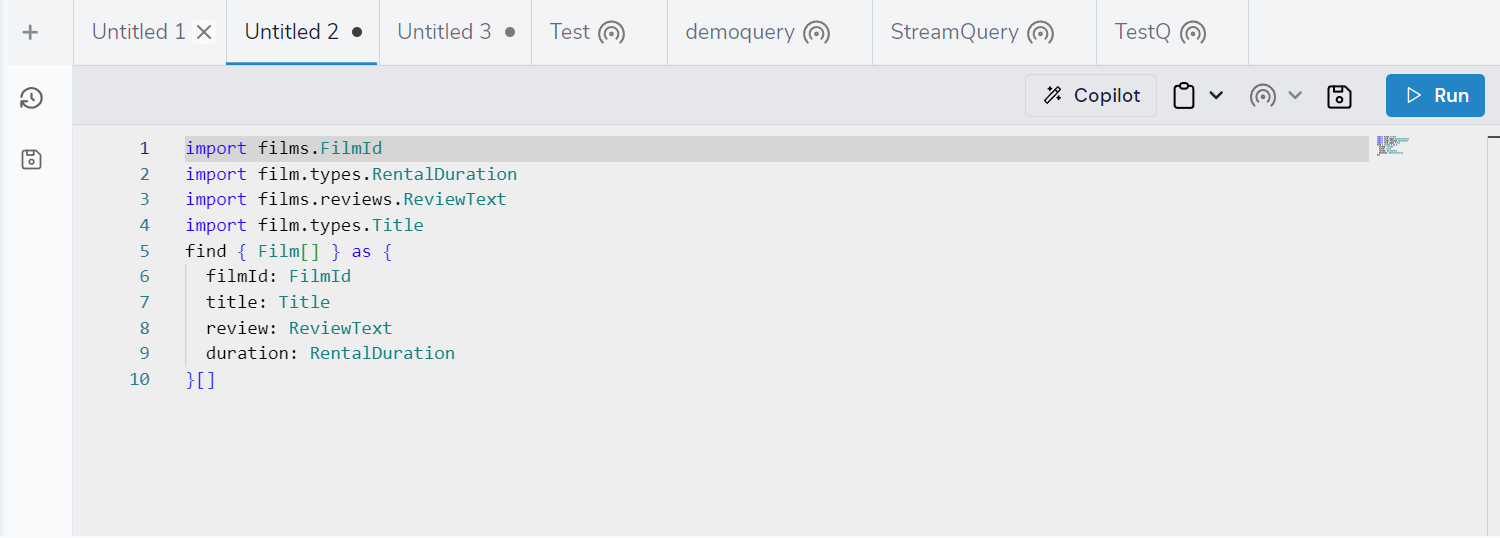

Improved Query Editor experience

You asked for it, and we listened - tabs are now part of the query editor experience!

Create as many queries as you like, safe in the knowledge that you can tweak and edit them in isolation, and run the query when you’re satisfied things are looking good.

Results are retained for each tab, allowing some serious multitasking.

We’ve also overhauled the query editor’s toolbar, allowing you to publish queries to endpoints, and remove them if they’ve already been published. Alongside that, you’re now able to see which queries have endpoints associated with them, launch the endpoint, or copy the endpoint URL or code for a cURL command.

We’re confident these UI additions further improve the developer experience, let us know what other improvements in this space you’d like to see.

Stream server is now folded into Orbital

The Stream Server historically was a standalone service that was responsible for executing long-running streaming queries.

It’s now folded directly into Orbital, simplifying the deployment significantly.

Improved S3 connector

Our S3 connector received a much-needed makeover.

When reading, we now support reading from either a single file, an entire bucket, or any type of wildcard glob pattern. Files on S3 are also now supported as a standard write target.

We also support reading and writing to S3 in any of Orbital’s supported formats.

This means you can now do things like query a database, enrich it against a series of APIs, and write the results to a csv file on an S3 bucket. Alternatively, you can read a CSV file from S3, split it, enrich each record and write the values to Kafka.

For example:

// Given a model of data stored on S3:

@Csv

model TradeSummary {

symbol : Symbol inherits String

open : OpenPrice inherits Decimal

high : HighPrice inherits Decimal

close : ClosePrice inherits Decimal

}

// Expose the S3 bucket:

@S3Service(connectionName = "MyAwsConnection")

service AwsBucketService {

@S3Operation(bucket = "MyTrades")

operation readBucket(filename:FilenamePattern = "trades.csv"):TradeSummary[]

}

// Expose some enrichment services:

service TradeVolumeApi {

@HttpOperation(url="https://myTradeService/volumes/{symbol}", method = "GET")

operation getTradeVolumes(@PathVariable("symbol") symbol : Symbol): TradeVolumes

}

// Expose our enriched data model:

model TradesWithVolumes {

symbol : Symbol

prices : {

open : OpenPrice

close : ClosePrice

}

volumes : {

volumes : TradeVolumes

}

}

// And a Kafka topic to write to:

service MyKafkaBroker {

@KafkaOperation(topic = "tradesWithVolumes")

write operation publishTrades(TradesWithVolumes):TradesWithVolumes

}

// Given the above, we can fetch from S3, transform against our

// enrichment API, and write the results to Kafka using the following

// query:

find { TradeSummary[] )

call MyKafkaBroker::publishTradesSee more examples in the docs for the S3 connector.

Kafka headers and message key are now available

You can now access the message key for a Kafka message directly in your model:

import com.orbitalhq.kafka.KafkaMessageKey

model Movie {

// The key from Kafka will be read into the id property

@KafkaMessageKey

id : MovieId inherits String

title : Title inherits String

}Similarly, Kafka message headers can now be provided using the @KafkaMessageMetadata annotation

More details are available in the updated Kafka documentation

Streaming queries are observable via Http endpoints

Orbital has supported saved streaming queries for some time. These run in the background doing things like consuming from a Kafka topic (amongst others), transforming, enriching and writing data.

Now, these same queries can be observed via HTTP endpoints - using either Server Sent Events or Websockets. Simply add an annotation to your query definition:

@HttpOperation(url = '/api/q/newReleases', method = 'GET')

query getNewReleaseAnnouncements {

stream { NewReleaseAnnouncement } as {

title : MovieTitle

reviewScore : ReviewScore

}[]

}These annotations can also be added by using the new publish endpoint feature detailed in the query editor improvements

This query would be available over Curl using

curl -X GET 'http://localhost:9022/api/q/newReleases' \

-H 'Accept: text/event-stream;charset-UTF-8'More details are available in the documentation for saved streaming queries.

Avro format

We’ve added support for Avro as a format - both reading and writing.

Additionally, we also support Confluent’s own, special, magical version of Avro, which injects a few additional bytes at the start … “magic bytes” — their words, not ours. Anyway, we support reading this, without a dependency on the Confluent schema server.

Find out more about Avro support in the Avro documentation

Batched writes for Mongo

Orbital can now batch upserts target to a Mongo Collections. See the documentation for details.

Batching mutations

Previously, mutations were run incrementally in parallel.

You can now optionally indicate that a mutation should be performed at the end of the query, after all projections have been completed. This is useful for targets like S3, where incremental writing isn’t supported.

More information and examples are available in our updated docs on mutations.

Other features:

Bug Fixes

Performance

There’s been a number of performance improvements across the stack:

- Fixed bottleneck when queries don’t use a projection in a mutation

- Fixed performance writing to Hazelcast maps

- Fixed performance issues with @Cache annotated queries

- A number of minor tweaks to the core query engine to improve performance

- We’ve fixed issues making it very slow to parse database metadata (when importing a database table)

Relative paths in workspace.conf

Some customers like to manually deploy their taxi projects onto the same box that Orbital is running on, and refer to the taxi projects using file paths (rather than pulling from a git repository).

In cases like this, it’s often convenient to declare your projects in workspace.conf with relative paths (relative to the workspace.conf file), rather than absolute paths. We’ve fixed a number of bugs that previously made this very hit-and-miss. Now, it’s all hits.

Pagination in query history

Previously, we only showed the first 100 results in query history. We now paginate these, so the full result set is available.

Websockets timing out

We fixed a number of issues with websockets timing out - this was particularly noticeable if Orbital was deployed in AWS behind an ALB, where the ALB would terminate websocket connections after a period of inactivity. We’ve added ping/pong on the websockets to prevent this.

Ability to remove projects pushed to Orbital

Often, a microservice will publish it’s project directly to Orbital. Previously, these weren’t removable using the UI. Now they are. That’s progress.

Fixed cast expressions on a field

This used to throw an error. Now it doesn’t.

find {

long: Long by (Long) Foo

}Projecting a scoped variable to a predefined array type

This also used to throw an error. We fixed that too.

find { FilmCatalog } as (films:Film[]) -> Movie[]Lots of bug fixes with Git based projects

We squashed lots of bugs related to workspaces that were pulled from git, and projects within workspaces that were pulled from git. This now behaves much much better.

SQS Subscriber fixes

We have fixed an SQS subscription that might result in corresponding poll operations interrupted after the first successful poll. Additional configuration parameters to fine tune AWS SQS poll operations were also added, see the docs for the details.

Changes to workspace.conf are now live reloaded

This bug manifested in lots of little, but rather annoying changes.

We now automatically update the UI after changes are made to the workspace.conf file (such as adding and removing projects).

Other bug fixes:

- We’ve addressed issues with Logout not working as expected on Cognito

- Importing a Protobuf spec from the UI now works

- Saving a query no longer breaks the formatting

- Language server now reconnects automatically after disconnect

- Cancelling a streaming query from the query history view works again

- Fixed a bug where JSON results viewer would show type hints over and over and over

- Download query results as CSV works again

- Browser would crash when running queries returning large volumes of results

- A misconfigured workspace.conf file could prevent the server from starting